Last Updated: February 05, 2026

If you run an AI-first product, you are quickly learning that the hardest part is not getting a demo to work. It is keeping it working when real users arrive, costs start swinging, and your stack evolves weekly. This is where a mobile app development company or a small founding team building the full product hits the same wall. Agents need state, tools, and guardrails. MCP is changing how tools get wired in. Vibe coding is speeding up delivery but also shipping new kinds of production bugs.

The 2026 pattern is clear. AI engineering is turning into systems engineering again, just with LLMs in the loop. The original article on AI engineering trends in 2026 calls out the big shifts. Agentic tech is becoming normal. The Model Context Protocol (MCP) is emerging as a standard way to connect models to tools. And the “vibe coding” wave is expanding the pool of people who can ship software, which changes how teams think about quality, security, and backend choices.

For AI-first startup founders, the practical question is not whether these trends are real. It is how to ship an MVP on limited runway without locking yourself into a backend that cannot handle agent workflows later, or a pricing model that punishes you the moment your app becomes popular.

Trend 1. Agents are moving from demo loops to product workflows

Photo by Andrea De Santis on Unsplash

In 2024, most “agent” projects were basically a chat UI and a tool call or two. In 2026, you can see the shift in how teams talk about them. They are no longer “a chatbot”. They are workflows that must be observable, recoverable, and safe. That is why agent conversations now include terms you used to hear in distributed systems reviews, like idempotency, retries, event logs, and state snapshots.

The moment an agent touches money, user data, or an external system, you inherit the same reliability expectations as any other backend feature. An agent that can draft an email is forgiving. An agent that can file a support ticket, create a GitHub PR, or trigger a payment refund is a production system.

OpenAI’s current guidance reflects this push toward engineering discipline. Their Agents SDK documentation frames agents as orchestrated systems with tools and best practices, not just prompting tricks. See the OpenAI Agents SDK guides for the direction their ecosystem is taking: https://platform.openai.com/docs/guides/agents-sdk

Here is what typically breaks first when teams “graduate” an agent from prototype to product.

The first production problem is agent state, not prompt quality

Founders often start by tuning prompts, then realize the real issue is that the agent cannot remember what it already tried. You need a durable record of what tools were called, what inputs were used, what outputs came back, and what decision the agent made.

In real deployments, you will end up needing:

- A way to store an agent run as an object with a lifecycle. Created, running, waiting, completed, failed.

- A way to store tool calls and results in a queryable form.

- A way to replay or resume after timeouts, user edits, or tool failures.

- A way to separate user-visible “conversation” from internal execution logs.

This is where your backend choice starts to matter. Traditional BaaS setups that are perfect for CRUD apps can become awkward when you need real-time state management for agents, background jobs, and audit logs.

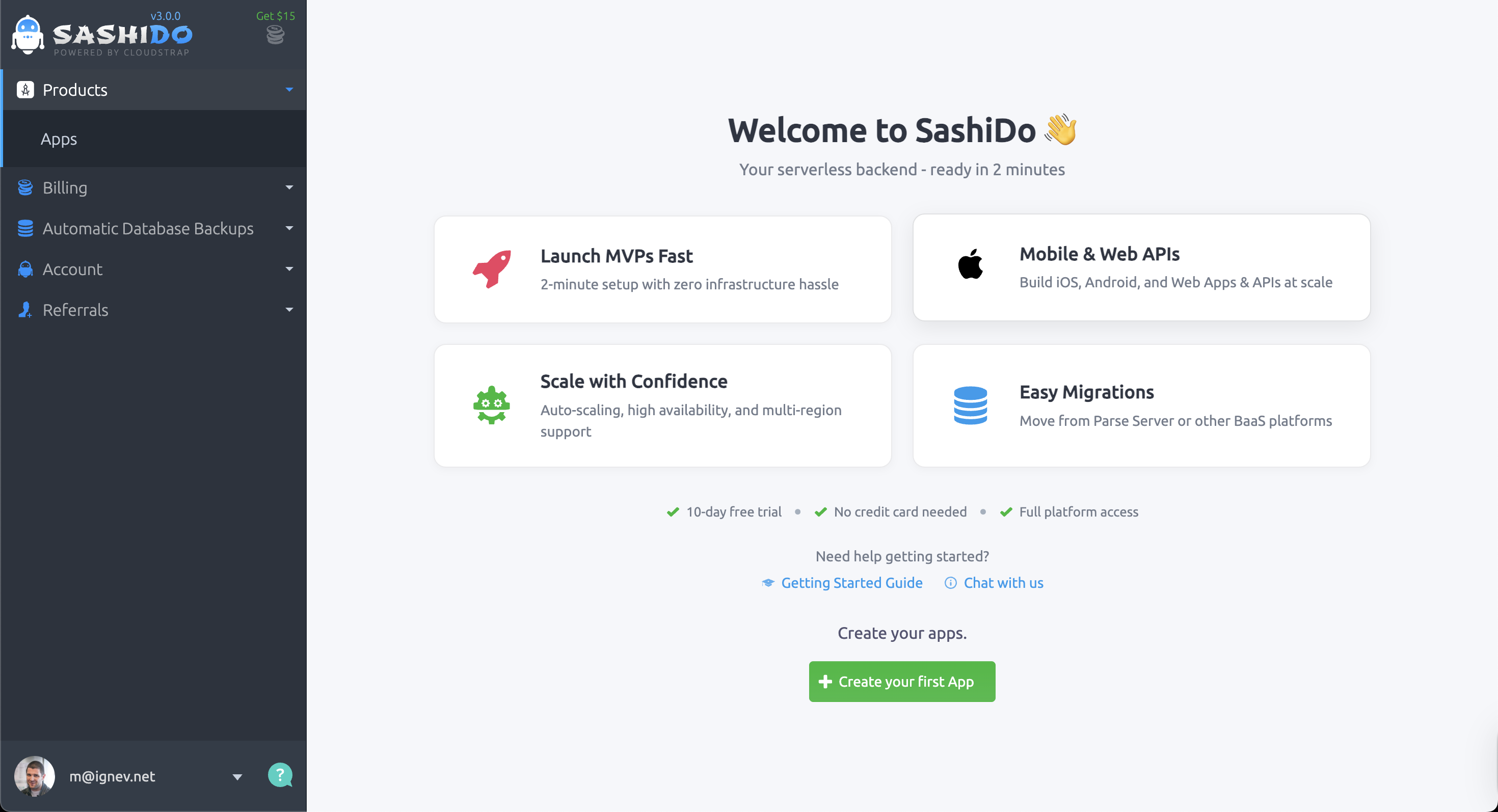

If you want a Parse-based backend that stays portable while you prototype agent state and tool execution, SashiDo - Parse Platform is a practical starting point because you keep the open-source Parse Server foundation while offloading the DevOps overhead.

Agent reliability looks like boring backend work, and that is good

Once an agent can take actions, reliability patterns return fast. You need to think in terms of workflows and compensation.

A common example is a research agent that pulls data from multiple sources and writes a summary back into your product. If one source fails, you do not want to lose the whole run, nor do you want to bill users for partial results without clarity. So you add timeouts, partial checkpoints, and a way to continue later.

In practice, you want a backend that can do three things without you building a platform team:

- Persist state in a way you can query by user, run id, and step.

- Trigger secure server-side execution for tool calls, data enrichment, and scheduled work.

- Expose real-time updates so the UI can show progress, not just a spinner.

That is the “agent backend” requirement. It is closer to building a workflow engine than it is to hosting a chat.

Trend 2. MCP is becoming the connective tissue between models and tools

Tool integration used to be bespoke. Each team had its own wrapper code for calling databases, third-party APIs, internal services, and file systems. In 2026, the industry is converging on a more standard contract.

Anthropic’s Model Context Protocol is positioned as an open standard for connecting AI apps to tools and data sources. The announcement about donating MCP and establishing governance through an industry-backed foundation signals where this is going: shared tooling, shared security expectations, and more interoperability. Source: https://www.anthropic.com/news/donating-the-model-context-protocol-and-establishing-of-the-agentic-ai-foundation

You can also follow the protocol resources at the official MCP site: https://modelcontextprotocol.io/

What changes when you adopt MCP early

MCP is not “just another spec”. It changes how you design your product surface.

Before MCP, teams hardcoded tool choices into agent prompts and orchestration code. When requirements changed, you refactored the agent. With MCP-style tool servers, you can treat tools like plugins. You still need good prompting and evaluation, but the interface becomes stable. That stability matters when you are shipping fast.

The pragmatic benefit for an early-stage team is migration leverage. If you standardize tool boundaries early, you can swap models or vendors later without rewriting every integration.

For AI-first founders, MCP becomes a forcing function to separate:

- Product data plane (what your app stores and serves).

- Tool plane (what agents can call).

- Model plane (which LLM runs the reasoning).

That separation is exactly what reduces vendor lock-in.

MCP plus a real backend: where most teams land

There is a misconception that MCP will “replace backend work”. It does not. MCP helps agents talk to tools. You still need a backend to store users, permissions, files, runs, billing, and the state that makes your product coherent.

The teams shipping smoothly are combining a stable backend platform with MCP tool servers that evolve quickly. That means you want:

- A backend with flexible schema, because agent payloads evolve.

- Server-side functions for tool execution and guardrails.

- Transparent scaling and pricing, because tool calls can spike.

This is where an open-source-based platform is attractive. With Parse Server you keep portability. With managed hosting, you avoid turning into a DevOps team.

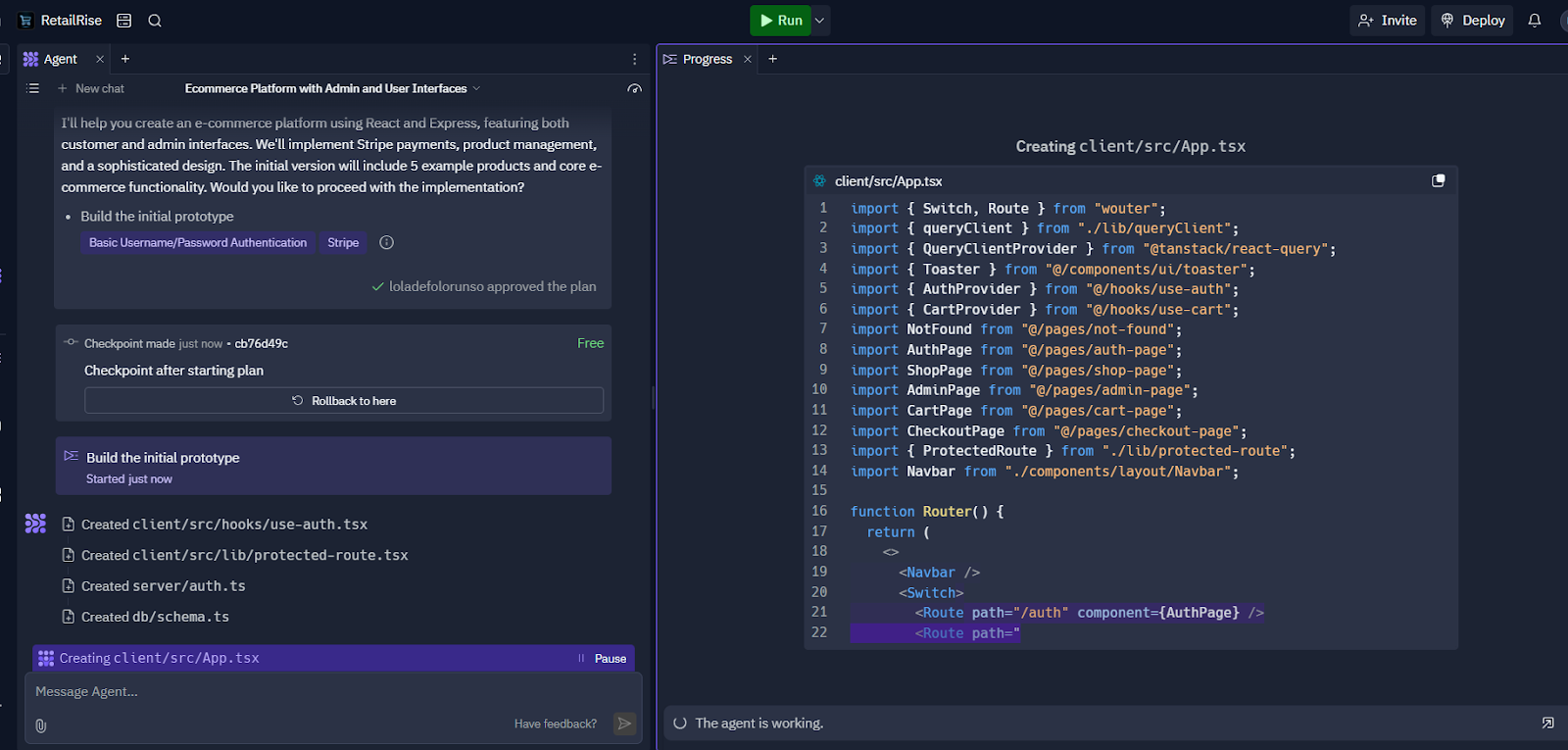

Trend 3. Vibe coding is real, and it changes what “shipping” means

The “vibe coding” term captures what many teams are already seeing. LLM-assisted coding has lowered the barrier to creating working features. More people can ship more code, faster. That includes founders, product engineers, and also non-traditional developers.

The upside is obvious. You can move from idea to MVP quickly. The downside is subtler. The cost of writing code drops, but the cost of operating code stays the same, and often rises.

When more code is produced with less friction, you tend to see:

- More integrations, and therefore more security surface.

- More asynchronous workflows, because agents, jobs, and webhooks stack up.

- More “almost correct” logic that passes a quick test but fails in edge cases.

This is why vibe coding pushes backend teams toward platforms that reduce operational drag.

The tooling wave: IDE agents and repo-level agents

The same year that vibe coding became mainstream, IDEs and repos gained agent-like coding features. GitHub Copilot’s agent mode is a good example of where the developer experience is headed, with agents that can take multi-step actions inside the IDE and beyond. Documentation: https://learn.microsoft.com/visualstudio/ide/copilot-agent-mode

At the repo level, the “coding agent” pattern is also expanding. GitHub documents how to assign tasks to Copilot to create PRs and work through issues. Documentation: https://docs.github.com/en/copilot/using-github-copilot/coding-agent/about-assigning-tasks-to-copilot

For founders, these tools are leverage. But they also amplify a recurring mistake: teams ship the frontend and forget the backend foundations.

If you are building with React Native for app development, vibe coding can get you to a working UI quickly. Many founders can now assemble React projects with a chat-driven workflow. The risk is that the backend becomes a patchwork of managed services, each with its own auth model, pricing curve, and migration tax.

Trend 4. Backend choices are splitting into two camps: locked convenience vs open flexibility

Most early-stage teams choose a backend the way they choose UI libraries. They pick the easiest thing to get moving. That can be fine for a simple app. But agents and MCP push you into longer-lived backend requirements.

The key trade-off looks like this:

- Locked convenience: fast setup, strong defaults, but you inherit vendor-specific APIs, quotas, and migration risk.

- Open flexibility: a platform built on open standards or open source that keeps your exit options open, but you must still choose who operates it.

This is why open-source backends have gained attention again. Parse Server remains one of the core open-source backends in this category. Repository: https://github.com/parse-community/parse-server

Why “unlimited requests” matters more with agentic products

With classic apps, request volume grows roughly with user count. With agentic products, request volume can grow with workflow depth. One user action might kick off multiple tool calls, background jobs, and real-time updates.

That creates a very specific runway problem. You can be “successful” in engagement and still lose money if every interaction triggers expensive request patterns across multiple vendors.

This is where SashiDo - Parse Platform fits cleanly into the 2026 trend stack for founders. It is Parse-based, so you avoid lock-in at the platform level. It is managed, so you avoid building a DevOps function. And the pricing model is positioned around transparent usage instead of surprise caps that punish growth.

A realistic decision lens for founders and small teams

If you are choosing between a managed BaaS and an open-source-based platform, use a decision lens tied to your agent roadmap.

Ask:

- Will we need background execution for tool calls, retrieval, and scheduled runs within 90 days.

- Will we need real-time updates for long agent runs and collaborative workflows.

- Will we need audit logs and replay for user trust, enterprise deals, or compliance.

- Can we export our data and run our backend elsewhere if pricing or policies change.

If you are building mobile application development services for clients, these questions matter even more. Your client’s product will evolve, and you do not want to be stuck defending a backend choice that becomes expensive or limiting later.

Competitor reality check: the lock-in discussion is not abstract

In practice, vendor lock-in shows up when you try to do one of three things: move regions, change your auth stack, or migrate to a different database model. If your backend is deeply coupled to proprietary APIs, migration becomes a rewrite.

If you are considering Firebase for speed, it is worth comparing the long-term trade-offs. Here is a detailed comparison of why a Parse-based approach can be more flexible for teams that want an exit option later: https://www.sashido.io/en/sashido-vs-firebase

Supabase is another common pick for fast MVPs. If your roadmap includes agent state, real-time workflows, and portability concerns, compare the constraints and operational differences: https://www.sashido.io/en/sashido-vs-supabase

The point is not that one tool is “bad”. The point is that agents increase backend complexity, so the migration tax becomes real sooner.

Trend 5. AI engineering is getting serious about security, cost, and governance

Photo by Peter Conrad on Unsplash

The more agency you give a model, the more security becomes an engineering requirement, not a policy requirement.

In 2026, the best teams are building lightweight governance into the product itself. They log tool actions. They scope permissions. They treat prompts as deployable artifacts. They add rate limits and anomaly detection.

OWASP’s Top 10 for LLM applications captures the categories of failures teams are actually seeing, like prompt injection and excessive agency. It is not theoretical. It maps cleanly to real incidents and the “surprise behavior” that founders report after deploying agents. Source: https://owasp.org/www-project-top-10-for-large-language-model-applications/

A practical checklist for safe tool use

You do not need a massive security program to reduce risk. You need a few consistent engineering defaults.

- Treat every tool call as untrusted input and untrusted output. Validate what the agent sends and what the tool returns.

- Separate read tools from write tools. Give the agent read access broadly, write access narrowly.

- Add user-visible confirmations for irreversible actions, especially in early versions.

- Record an audit log of tool calls with timestamps, user ids, and the exact payload sent.

- Add budgets. Token budgets and tool-call budgets. When the budget is hit, fail safe.

This is also why the backend platform matters. The easiest place to enforce these rules is server-side, not in a client app.

Cost control is now part of your product architecture

AI-first founders often focus on model pricing per token, then get surprised by everything around it: retries, embeddings, retrieval calls, long context windows, and orchestration overhead.

A healthy way to think about it is to separate costs into three buckets:

- Inference cost. Tokens, context length, and tool-augmented reasoning loops.

- Backend cost. Storage, database operations, background jobs, and real-time connections.

- Integration cost. Third-party APIs and operational tooling.

Agents increase all three. So you want visibility and levers. Visibility means you can attribute cost to a user, a feature, or an agent workflow. Levers means you can cap, degrade gracefully, or switch providers.

This is also where founders start asking whether to self-host models. The right answer depends on your usage pattern, latency needs, and team skills. If your core value is a vertical workflow and your usage is spiky, APIs can be the pragmatic choice. If you have stable high volume and clear latency constraints, self-hosting can pay off, but only if you can operate it reliably.

Self-host vs API: a decision guide that matches runway reality

If you are deciding between self-hosted models and API providers, use this short guide.

Choose API providers when you need to ship fast, your usage is unpredictable, or you want to focus engineering on product workflows rather than GPU operations.

Consider self-hosting when your token volume is consistent, you can estimate concurrency, you have a strong reason to control latency, or you need on-prem or strict data residency.

No matter which route you take, your backend still needs to be stable and portable. You do not want your model choice to force a backend rewrite.

How these trends hit mobile teams specifically in 2026

The agent, MCP, and vibe coding shifts are happening across software, but mobile apps have a few extra constraints.

Mobile UX punishes latency. Users close the app fast. That pushes you toward real-time updates and background continuation. If an agent workflow takes 30 seconds, your app needs to show progress and allow users to return later without losing context.

Mobile teams also ship across platforms. Many startups pick React Native for app development because it compresses the team size and speeds iteration. But as soon as the product includes agentic workflows, you need a backend that can handle long-running tasks, auth, and state in a consistent way across iOS, Android, and web.

This is where a mobile app development company planning an AI roadmap must stop treating the backend as “just storage”. In 2026, the backend is where agent reliability lives.

When enterprise mobile app development enters the picture

Even if you are a small startup, enterprise mobile app development expectations can arrive early. The moment you sell into regulated industries or larger customers, you get questions about audit logs, data export, uptime, and how you prevent an agent from taking unsafe actions.

This is not the time to discover your backend cannot support detailed logging or granular permissions without heavy customization.

A managed Parse-based platform can be a sweet spot here. You keep an open-source foundation, and you get operational stability without building a platform team. That is the core appeal of SashiDo - Parse Platform for teams that want to move fast but still think about the next phase.

Distributed build reality: USA, India, and everywhere

A lot of AI-first founders work with a mobile application development company in USA for product design and initial architecture, then scale delivery with a mobile app development company India for ongoing implementation and QA. That can work well, but it makes backend clarity even more important.

When multiple teams touch the product, you want backend conventions that are simple, documented, and hard to misuse. Open, well-understood patterns reduce “tribal knowledge” risk.

Putting it together: an agent-ready backend blueprint that stays flexible

The article’s core thesis is that agentic tech, MCP, and vibe coding are not separate trends. They reinforce each other.

Vibe coding speeds up shipping, which increases the number of integrations. MCP standardizes how those integrations are exposed to models. Agents turn those integrations into multi-step workflows that must be safe, observable, and cost-controlled.

If you map that to architecture, you get a blueprint:

- Keep your backend portable and open where possible.

- Put tool execution and guardrails server-side.

- Store agent state as first-class data, not as chat transcripts.

- Make real-time updates a normal part of the UI for long workflows.

- Add budgets and audit logs early, before enterprise questions arrive.

A concrete “week 1 to week 6” plan for founders

If you are building an MVP right now, here is a realistic rollout that matches how teams actually ship.

In week 1 and 2, build the product loop and store agent runs and tool calls in your backend. Do not over-optimize prompts yet. Focus on making runs replayable and debuggable.

In week 3 and 4, introduce one MCP-style tool boundary, even if you are not fully standardizing yet. The goal is to stop hardcoding tool assumptions into the agent.

In week 5 and 6, add budgets, logging, and a safe write-policy. You will feel this pay off when the first “weird” agent behavior report arrives, because you will have evidence instead of guesses.

If you want a managed Parse hosting setup that is ready for agent state, real-time workflows, and future migration flexibility, you can explore SashiDo’s platform at https://www.sashido.io/.

Conclusion: what a mobile app development company should optimize for in 2026

In 2026, shipping AI features is not about having the cleverest prompt. It is about building a system where agents can act, fail safely, recover, and stay within budget. MCP is pushing the ecosystem toward more standardized tool contracts. Vibe coding is increasing shipping speed, which raises the importance of backend reliability and security.

For an AI-first founder, or a mobile app development company delivering modern mobile apps and web applications, the backend decision is now strategic. You need a platform that can hold agent state, scale without surprise limits, and keep you out of vendor lock-in when you inevitably change models, tooling, or cloud strategy.

Build your agent workflows on a backend you can evolve. Then keep your tools modular with MCP-style boundaries. And when you are ready to move from prototype to a stable product, start at https://www.sashido.io/ to run Parse Server in a managed, no-lock-in setup that scales with your MVP, supports modern AI development out of the box, and lets you ship faster without inheriting DevOps complexity.